What you need to know

- Google announced that it will roll out a new AI note in the details page of images in the Photos app.

- If someone has used features like Magic Editor or Magic Eraser on a photo, this AI note will disclose that and credit “Google AI.”

- This feature is slated to begin rolling out “next week,” and Google says even its non-generative AI features are included.

Awareness about what’s genuine or AI-generated is taking center stage for Google and your photos.

Today (Oct 24), Google announced that it’s giving users more information about whether or not a photo has been edited with its AI software. The move stems from the company’s interest in being more transparent with users exchanging photos on its platform. Google states that, “starting next week,” the Photos app will detail whether an image has been edited with one of its AI tools.

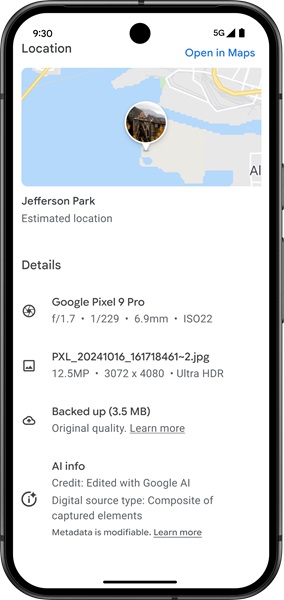

When surfacing “more information” about a photo, the bottom of that page will contain an “AI Info” box. That space will “credit” Google’s AI, so users know its Gen AI tools had a hand in the creation they’re viewing.

This AI note will also cover Google’s photography features that it deems “non-generative,” including images taken with “Add Me” or “Best Take.” Google states that while these don’t use generative AI to alter the photo, it will still inform others that some form of technical wizardry was used to capture it.

Users should be aware that these AI notes will be placed on photos edited using Google’s Magic Editor, Magic Eraser, and Zoom Enhance.

Because Google follows standards from the International Press Telecommunications Council (IPTC), photos edited with those tools already carry metadata indicating Gen AI use. However, this push for transparency makes that information more visible to every user.

Users may be in for more of this “transparency” in the future. Google teased that “this work is not done, and we’ll continue gathering feedback.” The company also mentioned it would continue to look at alternative solutions and methods to increase transparency about Gen AI creations. Moreover, Google cites its AI Principles as the reason it’s taking these extra steps to be more “responsible.”

We’ve seen something similar announced on another of Google’s platforms: YouTube. In March, YouTube detailed its new “altered or synthetic” content disclosure that all creators are required to fill out when uploading videos. YouTube states that if any portion of a creator’s video is altered with AI tools, the video will receive a label to inform viewers.

Creators cannot remove this label after disclosing that their video has been altered using digital means. However, content creators don’t have to worry if they’ve used AI to generate captions or scripts for their videos.