What you need to know

- Google announced that “Ask Photos” in the Photos app has now entered early access and users can begin signing up for its waitlist.

- Ask Photos leverages Gemini to discover content stored in your gallery when given a descriptive query.

- Google Photos is also rolling out an improved search experience for Android and iOS users.

Google has detailed the new features that stable app users and testers can expect from Photos.

The company states in a post that, as part of Google Labs’ AI testing environment, users can get their hands on “Ask Photos.” This new feature in Google Photos lets users ask the AI model Gemini questions about their stored content. The post adds that Gemini will “understand the context” of your photo gallery, meaning it should recognize “important” people, food, and more.

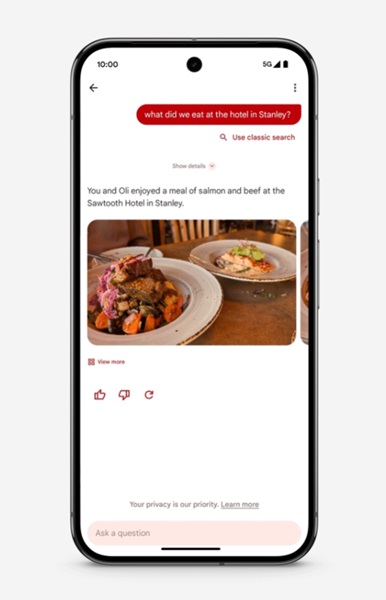

Users can be descriptive in their queries to Gemini in Photos. Google’s example shows a user asking what they ate at a hotel in a specific town (Stanley). After a brief moment, Ask Photos scours your photos and surfaces what it believes fits your question.

Google states Ask Photos can even understand items in your content like camping gear. However, the AI search could get things wrong, in which case users are encouraged to offer “extra clues or details” to help it.

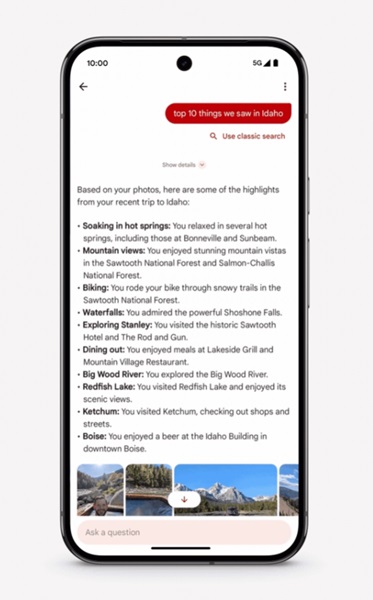

Aside from searching for a single photo, Ask Photos can create a collection of images from a recent trip. For example, Google says a user could ask “what were the top 10 things we saw in Idaho.” Ask Photos will go through the photos from that trip before surfacing what could fit your query’s criteria.

It’s worth mentioning that Google may use some of the questions you give Ask Photos to train the AI. The company states it will protect your privacy by “disconnecting” the query from your account, ensuring your anonymity during review.

Interested users can join the waitlist for Ask Photos today (September 5) and see if they can snag a spot.

Elsewhere, today’s update for Google Photos brings an improved search experience for Android and iOS users. The post highlights the app’s ability to understand “everyday language” when searching for a photo. Some examples include “Alice and me laughing” or “Emma painting in the backyard.”

Google has included options to sort results by relevance or by date, helping users find what they’re looking for as the app turns up results.