Most people wouldn’t buy a smart ring for fitness tracking, and for good reason. Smart rings are fantastic, seamless options for passive health data, and surprisingly decent at step counts if you ignore the false positives from gestures. But for fitness tracking, pretty much every brand we’ve tested has given less-than-accurate results, to put it mildly.

So when Oura sold its new Oura Ring 4 as a better fitness option, with its Automatic Activity Detection and improved real-time HR data during workouts, I bullied our Oura reviewer into going for a run and working out to test this claim for himself.

Our managing editor Derrek Lee is building a real collection of smart rings now, with the Oura Ring 4, Amazfit Helio Ring, and RingConn Gen 2. I asked him to work out while wearing each, alongside smartwatches that have shown reliable optical heart rate accuracy in our testing, to see how close the smart rings came to a proper result.

I also slipped on my Ultrahuman Ring Air to see how it performed, and I’ve included the fitness test we performed during our Samsung Galaxy Ring review. Here’s what we learned!

Why testing smart ring accuracy is so hard

Before I show the results, I’ll point out that this test won’t be as detailed and graph-happy as my previous fitness tests, like my cheap fitness watch accuracy test or my Garmin v. Coros v. Polar flagship showdown.

Why? Most smart ring apps (besides Amazfit’s Zepp Health) don’t have the tools to export heart rate graphs for specific workouts, like you can with established fitness watch brands. We tried to export Oura’s GPX files from Derrek’s Strava account, but they crashed my usual HR graph tool every time I tried to upload them.
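If you’re stuck with a GPX file that your graphing tool refuses to open, a short script can at least recover the average and max. This is a minimal sketch, not the tool I use: it assumes the file stores each heart rate sample in an extension element whose local name is `hr` (a common convention, but I haven’t confirmed it for every export), and the function names and the two-point sample track are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Made-up two-point track for illustration only; not data from our tests.
SAMPLE_GPX = """
<gpx xmlns="http://www.topografix.com/GPX/1/1"
     xmlns:gpxtpx="http://www.garmin.com/xmlschemas/TrackPointExtension/v1">
  <trk><trkseg>
    <trkpt lat="0" lon="0">
      <extensions><gpxtpx:TrackPointExtension>
        <gpxtpx:hr>120</gpxtpx:hr>
      </gpxtpx:TrackPointExtension></extensions>
    </trkpt>
    <trkpt lat="0" lon="0">
      <extensions><gpxtpx:TrackPointExtension>
        <gpxtpx:hr>134</gpxtpx:hr>
      </gpxtpx:TrackPointExtension></extensions>
    </trkpt>
  </trkseg></trk>
</gpx>
"""


def heart_rates_from_gpx(gpx_text):
    """Return every heart rate sample (bpm) in the GPX text, in file order."""
    root = ET.fromstring(gpx_text)
    samples = []
    for element in root.iter():
        # Tags look like "{namespace}hr"; compare only the local name so the
        # exact extension namespace does not matter (assumption: it is "hr").
        local_name = element.tag.rsplit("}", 1)[-1]
        if local_name == "hr" and element.text:
            samples.append(int(float(element.text)))
    return samples


def average_and_max(samples):
    """Return (rounded average, max) in bpm, or None if there are no samples."""
    if not samples:
        return None
    return round(sum(samples) / len(samples)), max(samples)


if __name__ == "__main__":
    print(average_and_max(heart_rates_from_gpx(SAMPLE_GPX)))
```

Matching on the local tag name rather than one fixed namespace is a deliberate choice here, since different apps may write the extension slightly differently.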

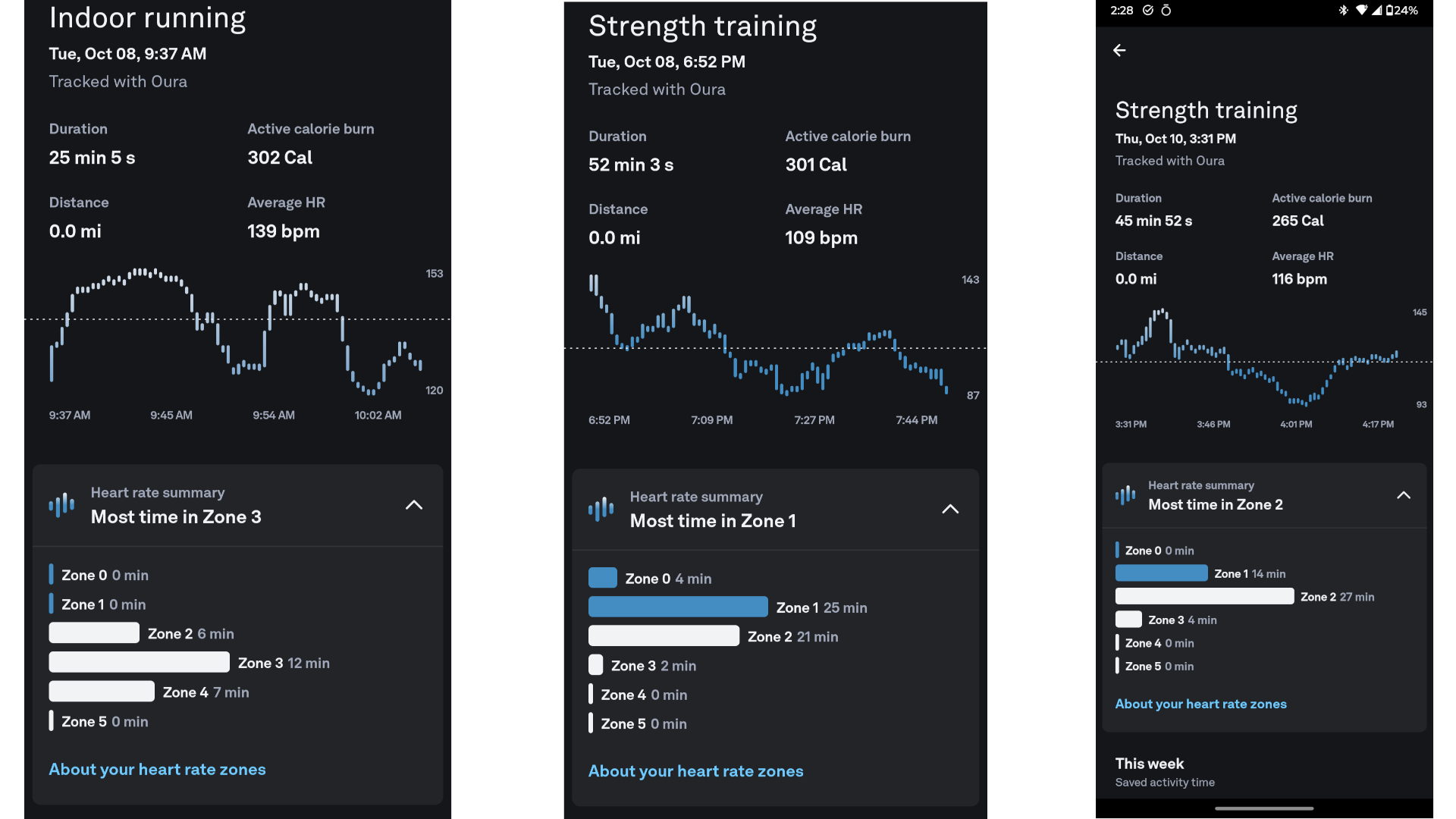

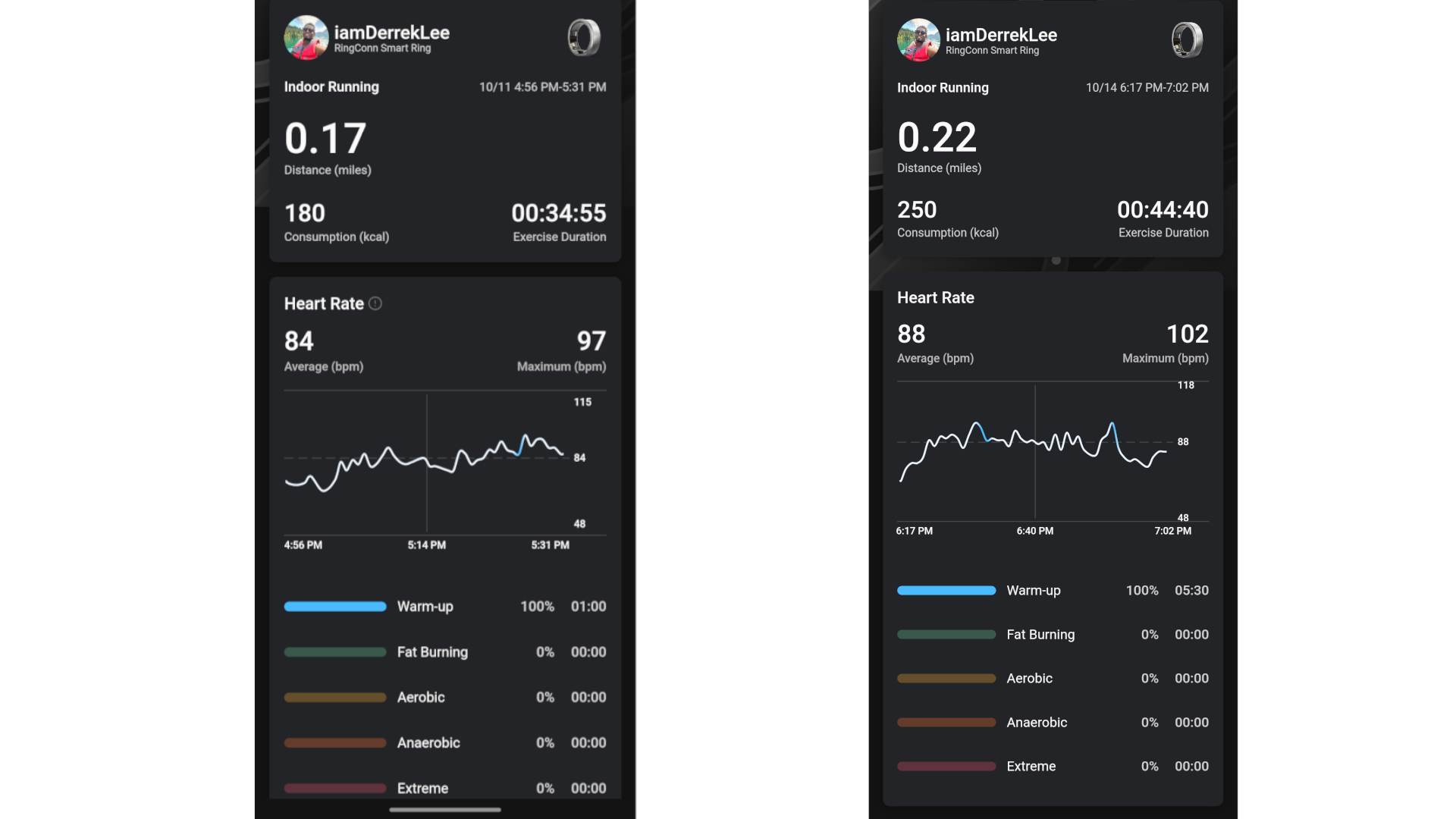

Plus, some of these smart rings don’t even let you trigger a manual workout unless it’s a specific type, like walking or running. For some brands like RingConn, we had to trick the app by saying Derrek was “running” during a gym workout to have it save his heart rate data. Without a workout mode, smart rings don’t sample your heart rate frequently enough for the results to be at all helpful.

Point being, I’m mostly making these comparisons based on heart rate averages and eyeballing HR graphs from slapdash manual workouts. But don’t worry: It’s still enough data to show how middling most of these smart rings are for fitness.

Testing smart ring heart rate accuracy during workouts

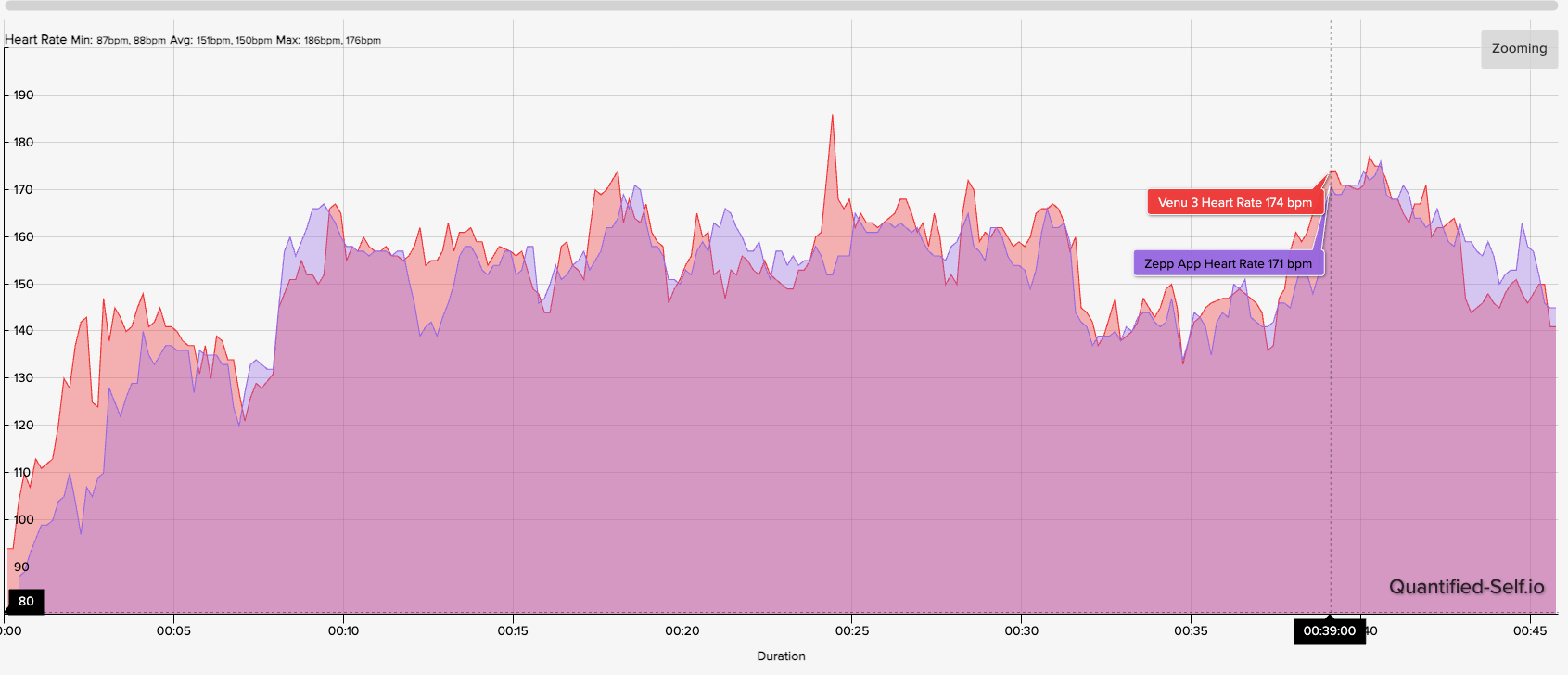

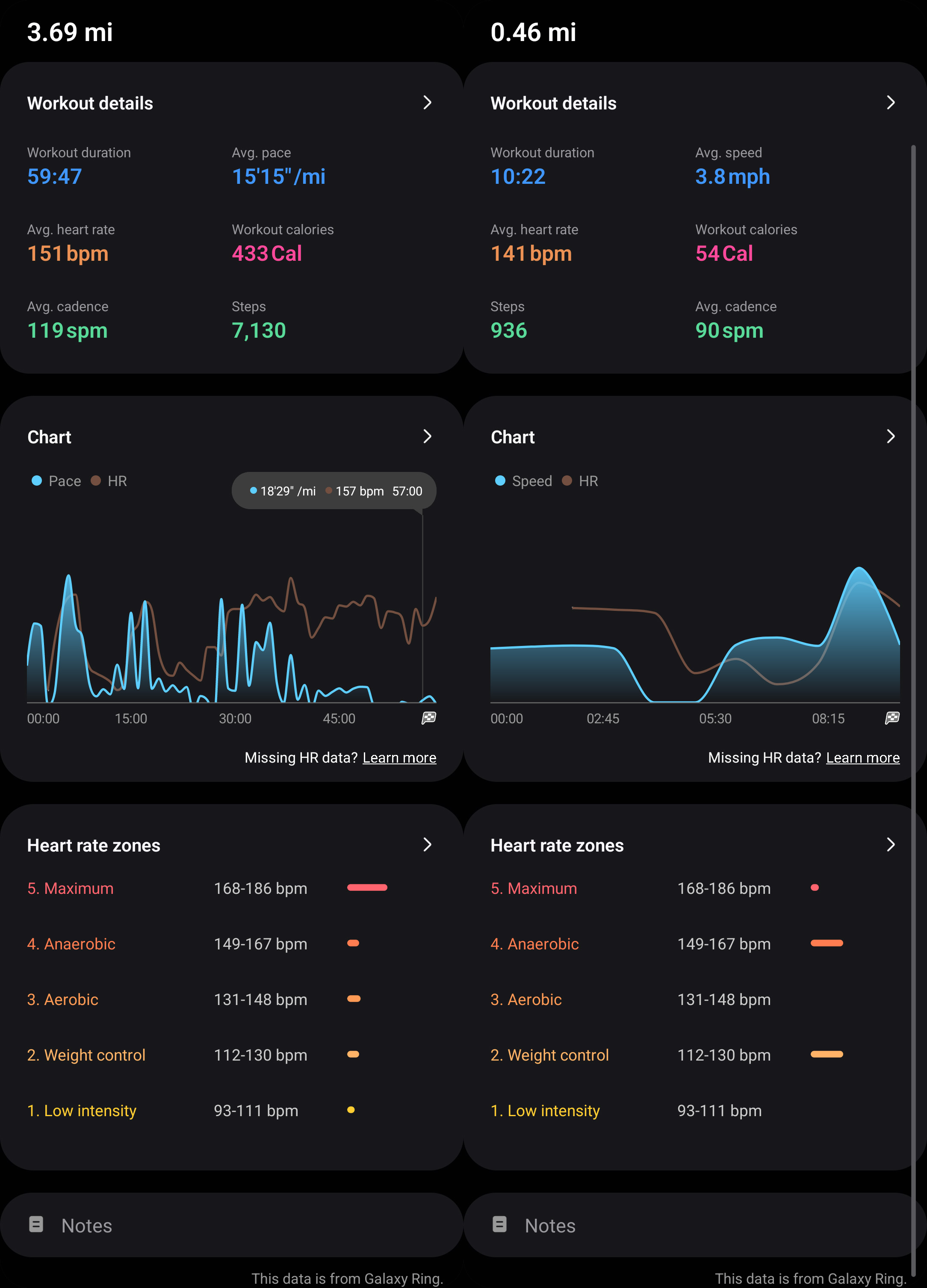

For this smart ring fitness accuracy test, Derrek ran once and completed four gym workouts, wearing two watches — the Garmin Venu 3 and Pixel Watch 3 — and three smart rings from Amazfit, Oura, and RingConn. These were the results:

| Device name | Workout 1 (HR average / max) | Workout 2 | Workout 3 | Workout 4 | Workout 5 |
|---|---|---|---|---|---|
| Garmin Venu 3 | 152 bpm / 179 max | 100 bpm / 137 max | 123 bpm / 158 max | 98 bpm / 127 max | 151 bpm / 186 max |
| Google Pixel Watch 3 | 148 bpm / 178 max | 103 bpm / 150 max | 123 bpm / 160 max | 104 bpm / 140 max | 148 bpm / 172 max |
| Amazfit Helio Ring | 148 bpm / 175 max | 103 bpm / 145 max | 123 bpm / 153 max | 103 bpm / 128 max | 150 bpm / 176 max |
| Oura Ring 4 | 139 bpm / 153 max | 109 bpm / 143 max | 116 bpm / 145 max | 102 bpm / 116 max | 95 bpm / 109 max |
| RingConn Gen 2 | 134 bpm / 164 max | 83 bpm / 101 max | 88 bpm / 117 max | 84 bpm / 97 max | 88 bpm / 102 max |

Without a proper chest or arm strap, I can’t say for certain whether the Venu 3 or Pixel Watch 3 was more accurate for Derrek. The Venu 3 did very well in my review compared to a COROS band, while I gave the Pixel Watch 3 a “B” for accuracy in its review: it was generally extremely accurate but struggled in the 160+ bpm range. Still, you can see that the two match each other fairly closely, which gives us a benchmark for what wrist-based optical sensors typically offer for accuracy.

Now compare the three smart rings and the massive gaps between their results. You’ll see that only the Amazfit Helio Ring is consistently accurate, especially if we flip a coin and assume Google is more accurate than Garmin here. Its averages are very much on point, and while its max-HR data falls short, it doesn’t underestimate as badly as Oura or RingConn.
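To put rough numbers on that gap, here is a small sketch that takes the figures from the results table above and works out how far each ring landed, on average, from each watch. The mean absolute gap is my own choice of yardstick rather than a formal accuracy score, and the function and constant names are just illustrative.

```python
# Heart rate figures (bpm) copied from the results table above.
# Each device has one (average, max) pair per workout, workouts 1-5 in order.
RESULTS = {
    "Garmin Venu 3": [(152, 179), (100, 137), (123, 158), (98, 127), (151, 186)],
    "Google Pixel Watch 3": [(148, 178), (103, 150), (123, 160), (104, 140), (148, 172)],
    "Amazfit Helio Ring": [(148, 175), (103, 145), (123, 153), (103, 128), (150, 176)],
    "Oura Ring 4": [(139, 153), (109, 143), (116, 145), (102, 116), (95, 109)],
    "RingConn Gen 2": [(134, 164), (83, 101), (88, 117), (84, 97), (88, 102)],
}

WATCHES = ["Garmin Venu 3", "Google Pixel Watch 3"]
RINGS = ["Amazfit Helio Ring", "Oura Ring 4", "RingConn Gen 2"]

AVG, MAX = 0, 1  # positions inside each (average, max) pair


def mean_abs_gap(device, reference, index):
    """Mean absolute difference in bpm between two devices across all workouts."""
    gaps = [
        abs(dev[index] - ref[index])
        for dev, ref in zip(RESULTS[device], RESULTS[reference])
    ]
    return sum(gaps) / len(gaps)


if __name__ == "__main__":
    for watch in WATCHES:
        print(f"Compared against the {watch}:")
        for ring in RINGS:
            avg_gap = mean_abs_gap(ring, watch, AVG)
            max_gap = mean_abs_gap(ring, watch, MAX)
            print(f"  {ring}: averages off by {avg_gap:.1f} bpm, maxes by {max_gap:.1f} bpm")
```

By this measure, the Helio Ring’s averages sit about 2.6 bpm from the Venu 3 and 0.6 bpm from the Pixel Watch 3, while the Oura Ring 4 is off by 17.8 and 15.4 bpm and the RingConn Gen 2 by 29.4 and 29.8 bpm. With only five workouts and no chest strap as ground truth, treat these as ballpark figures.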

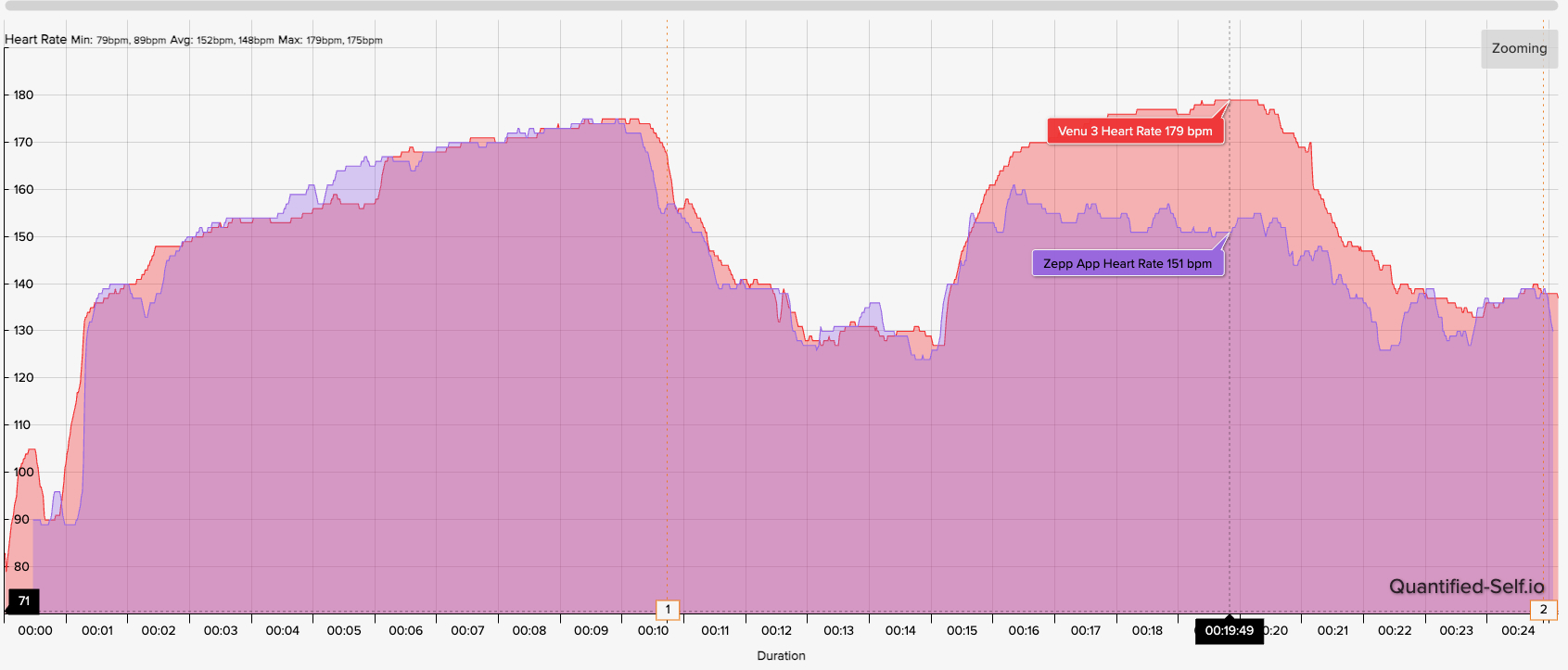

It’s not perfect, of course. You can see in the two heart rate graphs below that it falls well short of Garmin for an extended stretch once Derrek’s heart rate reaches certain heights; in the second graph, it’s always close but tends to show small valleys where Garmin peaks. But overall, it’s closer than I expected from any smart ring.

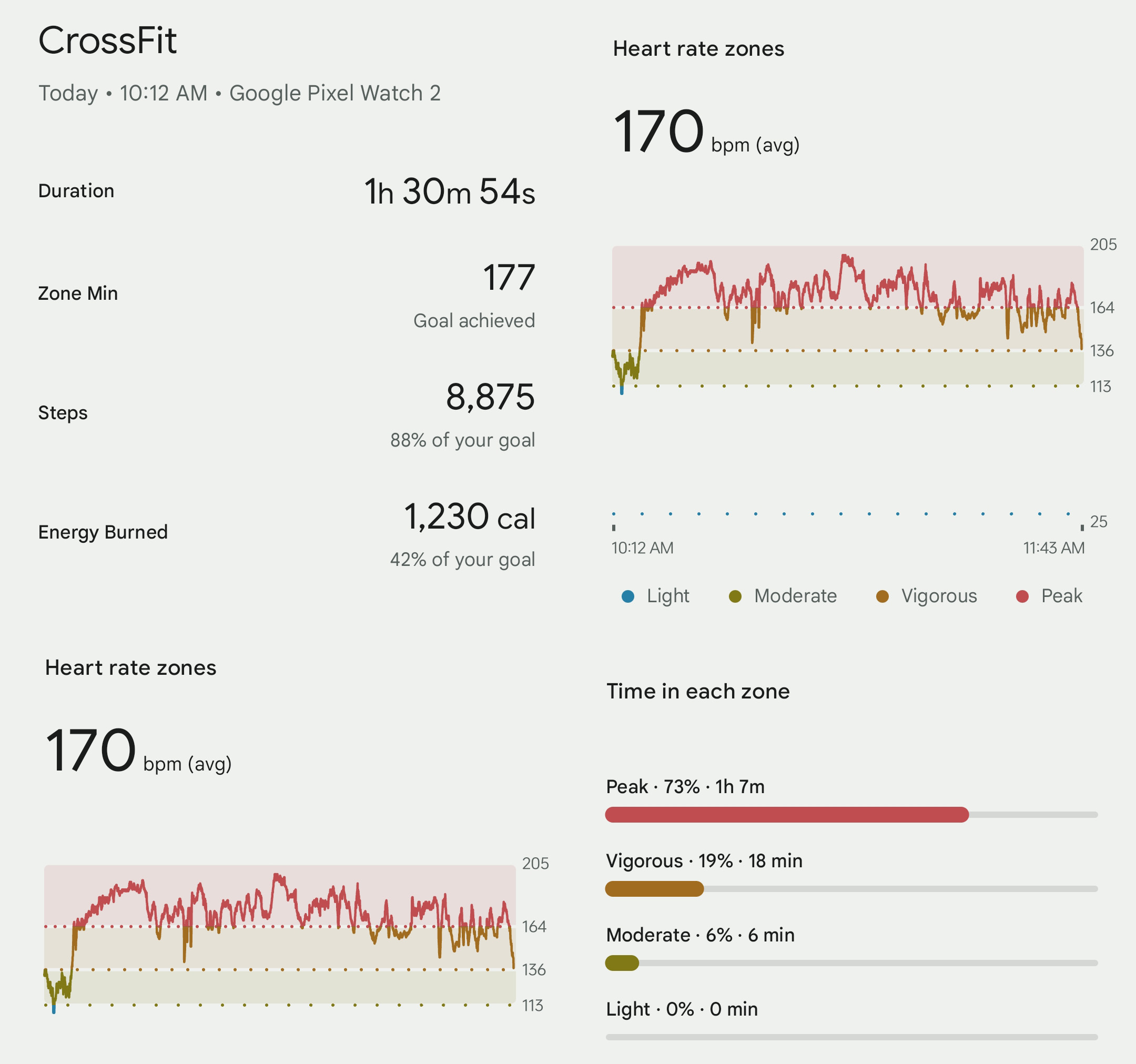

Oura doesn’t do badly for low-aerobic workouts in the 100–130 bpm range, but the higher Derrek’s heart rate climbed, the more the Oura Ring 4 struggled. In the last workout, the heart rate chart showed a massive 60–70 bpm gap for most of the session.

RingConn’s stats are so far off that Derrek suspects the fit: he was forced to wear it on his ring finger instead of his usual index finger (he only has so many fingers, after all), which left it too loose for a proper reading. That might explain the gap, so we can give RingConn a tentative pass here, while still keeping the data for now.

I will point out that if you wear a smartwatch too loosely or too tightly, your results will be skewed, too…but not this much. It goes to show how important a smart ring’s fit is for proper results.

Nick and I own only the Galaxy Ring and Ultrahuman Ring Air, respectively. So our tests are more straightforward, showing how each ring compares against our smartwatches of choice.

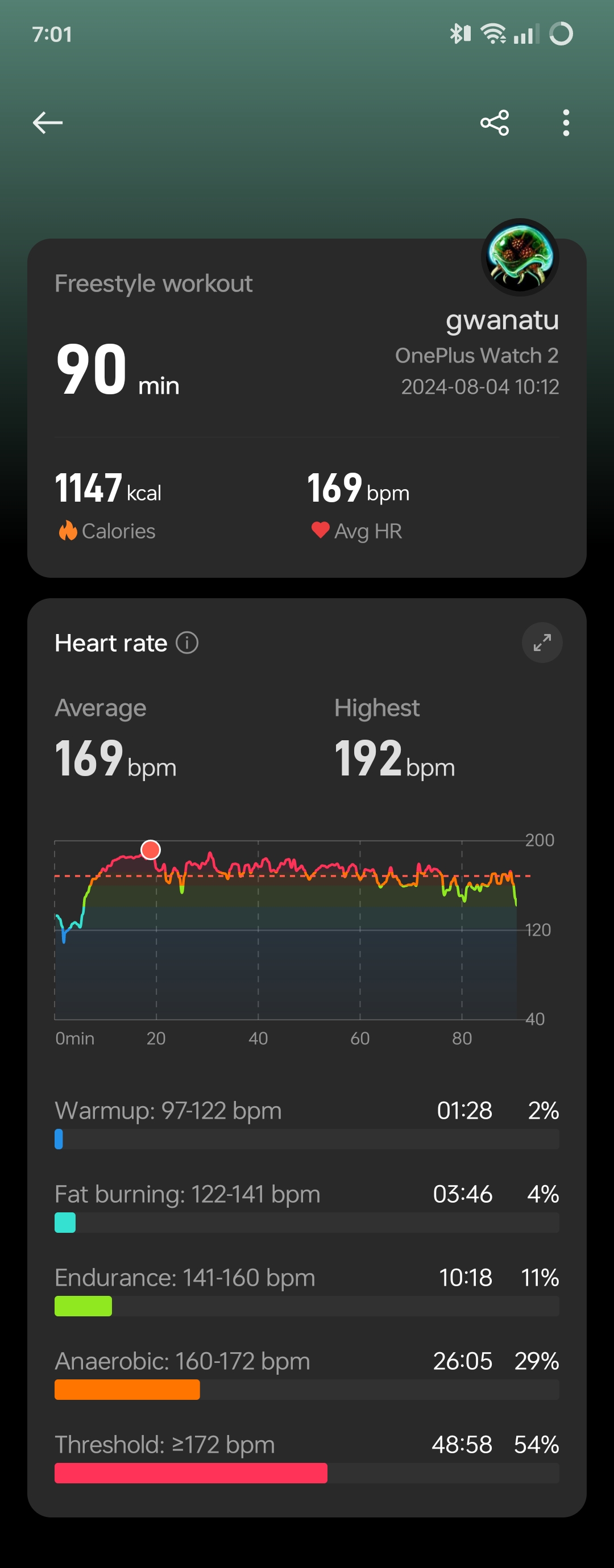

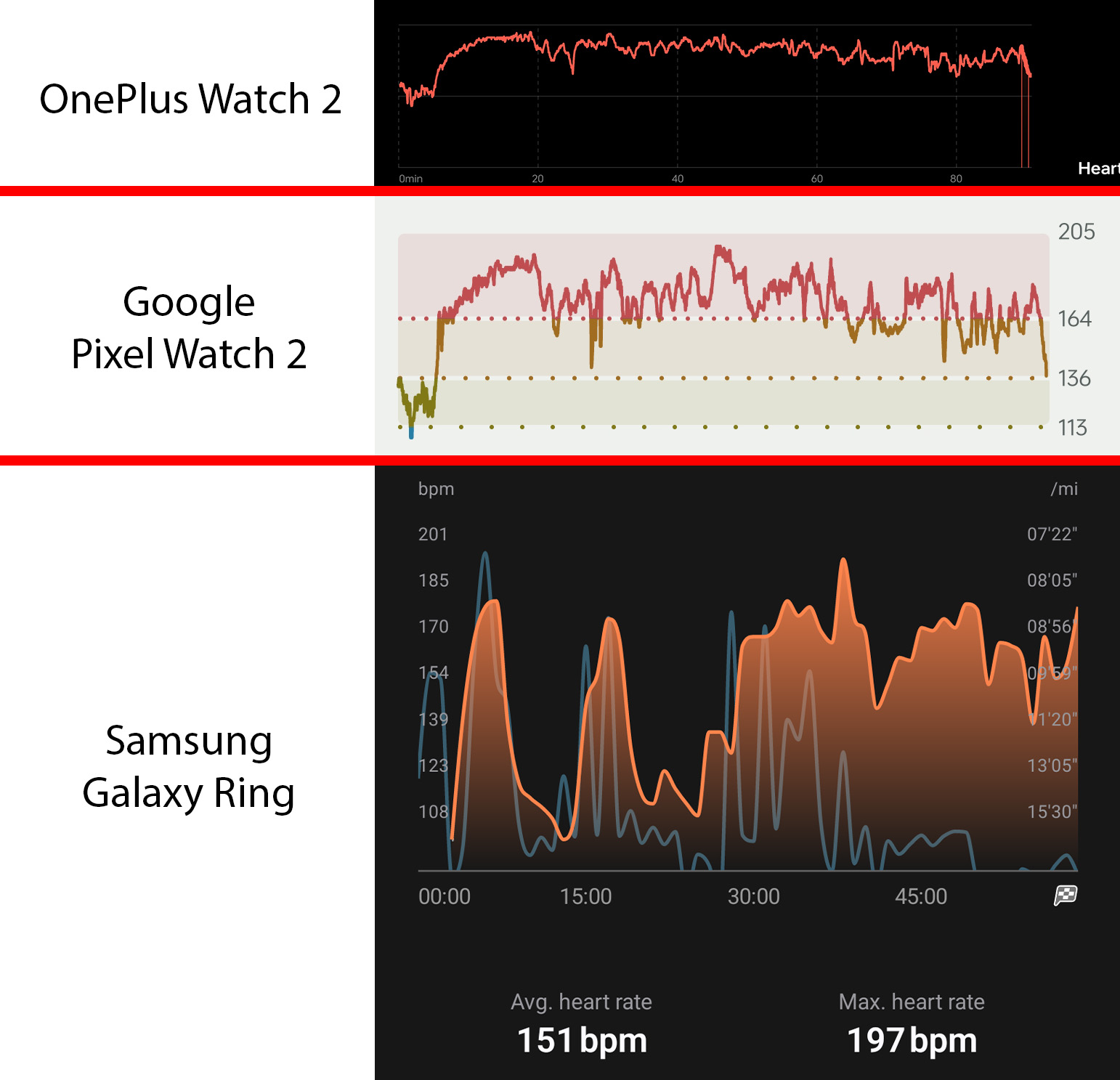

Nick already wrote about the Galaxy Ring’s fitness struggles in his review: The Pixel Watch 2 and OnePlus Watch 2 averaged 170 and 169 bpm during a hard Spartan Race; the Galaxy Ring averaged under 150 bpm and randomly split the race across two activities.

As for my Ultrahuman Ring Air, it still lists its Workout Mode as in Beta about half a year after I finished my review, and the results are still wonky. Unlike other smart rings that severely underestimate your active heart rate, Ultrahuman told me my heart rate average was 182 bpm for a track workout when Garmin said it was 168 bpm. A couple of days later, it told me my average during a light run was 153 bpm; Garmin registered 143 bpm and said 153 bpm was my max.

Does smart ring fitness accuracy matter?

As I said from the beginning, most athletes won’t buy a smart ring specifically for workouts. Runners and cyclists want a watch for its GPS and screen data, and while you can lift weights while wearing a smart ring, it can interfere with your grip and get scratched up unless you attach a silicone cover.

Still, even if people don’t expect a smart ring to be perfect, they want ballpark accuracy so they can keep track of burned calories. And since brands like Oura and Ultrahuman try to estimate your VO2 Max or Cardio Capacity, it’s important that they do so with accurate data instead of inadvertently misleading people.

I came into this test assuming that the new Oura Ring would sweep away the competition, but you know what they say about assuming! Instead, it’s the Amazfit Helio Ring that won the hardware accuracy contest. And since Amazfit recently discounted it to $199 and made its pricey subscriptions free, the Helio Ring has become more appealing.

On the other hand, the Helio Ring has unresolved problems like the limited number of sizes (three), short battery life (four days), and lack of automatic workout detection. You might still prefer an Oura Ring with its middling accuracy because you don’t have to manually start a workout in the app every time you run or lift weights.

In other words, all of the best smart rings have work to do on the fitness front, but maybe they’re close enough for everyday athletes not to mind the accuracy gaps. Either that or they’ll just keep using their fitness watches instead and only use smart rings for health and sleep data.